Improve data quality: Top 7 strategies for better results

Improving data quality is essential for making well-founded business decisions. In this article, you will learn specific strategies for systematically increasing data quality and maintaining it at a high level in the long term.

Key points at a glance

- A common understanding of business terms within the company is essential.

- A continuous process of data quality management, including regular measurement and a robust data quality management framework, is crucial to ensure high data quality in the long term.

- Technological solutions such as data cleansing tools and monitoring tools help to maintain data quality, while training sensitises employees to the importance of data quality and enables them to actively contribute to quality assurance.

Definition and importance of data quality

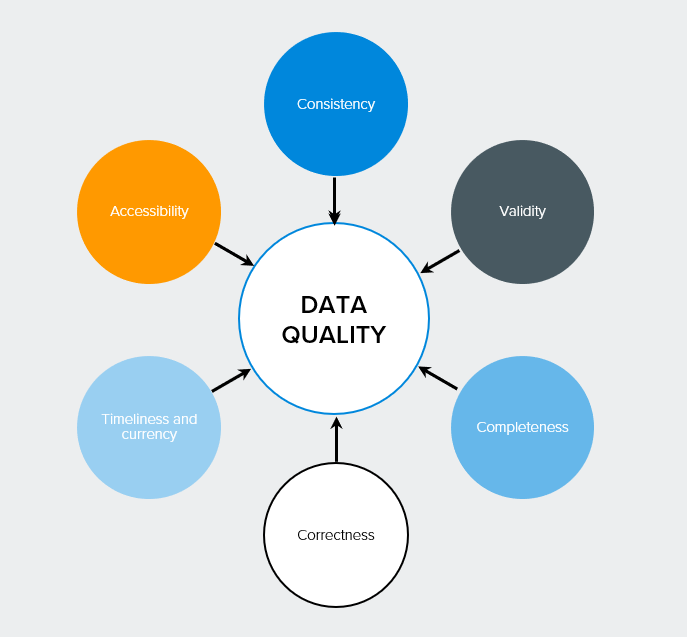

Data quality is crucial to the success of a company. It refers to how accurate and useful data is for its intended purposes. Think of data as the foundation on which your organisation is built. If this foundation is fragile, any decision or strategy based on it will be unstable. Without solid data management, the data you collect can be worthless and its full potential goes unrealised. So it’s not just about having data, it’s about having the right data in the right quality at the right time.

A common understanding of data is essential to ensure that all areas of the company understand what certain business terms or data objects mean and what they do not. Only if all departments start from the same definitions and quality standards can a company truly be data-driven and realise its full potential.

Strategies for improving data quality

Improving data quality is not a one-off task but a continuous process that requires strategic thinking and consistent action. This process is often described as a “closed loop” or “data quality circle”. The approach ensures that the quality of your data is not only improved, but also maintained at a high level in the long term.

The key to high data quality is a structured approach to data quality management, set up as a repeatable and flexibly adaptable process. This means that you not only react to problems as they occur, but also take proactive measures to continuously improve the quality of your data. It is recommended that you select a pilot area to start with so that you can easily integrate data quality management into your day-to-day business. This will allow you to gain experience and refine your strategy before rolling it out across the organisation.

The involvement of stakeholders and consultants is another critical success factor. It helps to avoid misunderstandings and make changes in business processes transparent. By involving all relevant parties from the outset, you ensure that your data quality initiatives are widely supported and can be implemented effectively.

In the following sections, we will look at some specific strategies that will help you to improve your data quality in the long term.

Continuous measurement of data quality

Continuously measuring data quality is the compass that navigates your organisation through the sea of data. Understanding the issues and recognising their impact is critical to improving data quality. Without regular and systematic measurement, you will be in the dark and unable to respond effectively to problems or track improvements.

An audit to determine the status quo can be a useful starting point. Building on this, you should implement an automated process. The regular review and adjustment of data quality requirements is necessary to fulfil current needs. Remember: you can’t improve what you can’t measure. By defining clear metrics and targets for your data quality and reviewing them regularly, you create the basis for continuous improvement and can demonstrate the success of your efforts.
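As a rough illustration of what "clear metrics and targets" could look like in an automated check, the following Python sketch computes two common metrics, completeness and uniqueness, and compares them with targets. The sample records, the field names and the target values are assumptions made up for this example, not recommendations.

```python
def is_filled(value) -> bool:
    """A value counts as present if it is not None and not blank."""
    return value is not None and str(value).strip() != ""


def completeness(records: list[dict], field: str) -> float:
    """Share of records in which `field` is filled (1.0 for an empty data set)."""
    if not records:
        return 1.0
    return sum(is_filled(r.get(field)) for r in records) / len(records)


def uniqueness(records: list[dict], field: str) -> float:
    """Share of filled values of `field` that are distinct, ignoring case."""
    values = [str(r[field]).strip().lower() for r in records if is_filled(r.get(field))]
    if not values:
        return 1.0
    return len(set(values)) / len(values)


# Hypothetical sample data and targets, for illustration only.
customers = [
    {"id": 1, "email": "anna@example.com", "city": "Berlin"},
    {"id": 2, "email": "", "city": "Hamburg"},
    {"id": 3, "email": "anna@example.com", "city": None},
    {"id": 4, "email": "ben@example.com", "city": "Munich"},
]
TARGETS = {"email completeness": 0.95, "email uniqueness": 1.0}

scores = {
    "email completeness": completeness(customers, "email"),
    "email uniqueness": uniqueness(customers, "email"),
}

for metric, score in scores.items():
    status = "ok" if score >= TARGETS[metric] else "below target"
    print(f"{metric}: {score:.2f} (target {TARGETS[metric]:.2f}) -> {status}")
```

Running such a script on a schedule and storing the scores over time is one simple way to track whether improvement measures are working.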

Establishment of a data quality management framework

A robust data quality management framework is the backbone of any successful data quality strategy. Data quality management comprises three main elements: Data Profiling, Data Cleansing and Data Management. Together, these elements form a holistic system that ensures the quality of your data from collection to utilisation.

Data profiling is a fundamental method for analysing the quality of data and enables the identification of problems such as inconsistencies, missing values and discrepancies within data sources. By using data profiling tools, you can gain a clear picture of the state of your data and initiate targeted improvement measures. The regular verification and updating of address details is a practical example of how you can improve data consistency.
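Dedicated profiling tools go much further, but the basic idea can be sketched in a few lines of Python: for each column, count missing values, distinct values and the data types that actually occur. The records and column names below are hypothetical.

```python
from collections import Counter


def profile(records: list[dict]) -> dict[str, dict]:
    """Return simple per-column statistics for a list of records."""
    columns = sorted({key for record in records for key in record})
    report = {}
    for column in columns:
        values = [record.get(column) for record in records]
        present = [v for v in values if v is not None and str(v).strip() != ""]
        report[column] = {
            # Absent keys, None and blank strings all count as missing.
            "missing": len(values) - len(present),
            # Raw spellings are kept apart so that variants become visible.
            "distinct": len({str(v).strip() for v in present}),
            # Mixed types in one column hint at inconsistent data capture.
            "types": dict(Counter(type(v).__name__ for v in present)),
        }
    return report


# Hypothetical sample data, for illustration only.
rows = [
    {"customer_id": 1, "postcode": "10115", "country": "DE"},
    {"customer_id": 2, "postcode": 20095, "country": "de"},
    {"customer_id": 3, "postcode": "", "country": "Germany"},
    {"customer_id": 4, "country": "DE"},
]

report = profile(rows)
for column, stats in report.items():
    print(column, stats)
```

In this sample, the profile shows two missing postcodes, a postcode column that mixes text and numbers, and three different spellings for what is probably the same country.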

By implementing a structured framework that covers all these aspects, you create the conditions for a sustainable improvement in your data quality.

Training and sensitisation of employees

The best technologies and processes are only as good as the people who use them. Training employees in the handling of data and its quality is effective in practice because, alongside technical support, it gives them substantial help in their daily work. By sensitising your employees to the importance of data quality and providing them with the necessary skills, you create a culture of data excellence in your company.

It is important that teams stay up to date so that their attention does not slip and they keep improving data quality. Regular training, workshops and best-practice sharing can help to ensure that the topic of data quality remains firmly anchored in the minds of all employees. Remember: every employee who works with data is a potential data custodian.

By empowering and motivating everyone involved to contribute to data quality, you create a strong foundation for long-term success.

Technological solutions to support data quality

In today’s digital era, technological solutions play a crucial role in improving and maintaining data quality. To ensure the accuracy and completeness of data, organisations commonly implement data cleansing and data validation tools, which cleanse data and check its accuracy. They act as digital guardians that work tirelessly to keep your data clean and reliable.

In addition, data integration tools can help ensure that data from different systems and processes is consistent and standardised to improve analysis. Think of these tools as digital translators that ensure all your data sources speak the same language. This is especially important in large organisations with many different systems and departments.

It is important to note that real-time analyses and artificial intelligence require a stable database to function smoothly. Without high-quality data, even the most advanced AI systems will not be able to deliver reliable results. By investing in technological solutions to improve data quality, you are laying the foundation for future innovation and data-driven decision-making in your organisation, including business intelligence.

Use of data cleansing tools

Data cleansing tools are the Swiss army knives in your data quality toolkit. Data cleansing is the process of detecting and correcting errors and inconsistencies in data, including identifying and removing duplicates, incorrect values and inconsistent information. These tools work tirelessly to clean and optimise your data sets so that you can focus on analysing and using the data.

There are a variety of data cleansing tools on the market, each with its own strengths. Here are a few examples:

- OpenRefine: A popular open source tool for data cleansing that can convert data between different formats.

- Trifacta Wrangler: Uses machine learning to suggest data transformations and aggregations, which speeds up and simplifies the cleansing process.

- Melissa Clean Suite: Improves data quality in CRM and ERP platforms with functions such as data deduplication and data enrichment.

The choice of the right tool depends on your specific requirements and the complexity of your data landscape.
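To make the core cleansing steps more tangible, here is a minimal Python sketch that standardises values and removes duplicates. It does not reproduce how any of the tools above work. The field names and the choice of the e-mail address as the matching key are assumptions for this example.

```python
def normalise(record: dict) -> dict:
    """Trim whitespace and unify letter case for the fields used in matching."""
    return {
        # Collapse repeated spaces. title() is a simplification that
        # mishandles names such as "McDonald".
        "name": " ".join(str(record.get("name") or "").split()).title(),
        "email": str(record.get("email") or "").strip().lower(),
    }


def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first record for each normalised e-mail address."""
    seen: set[str] = set()
    unique = []
    for record in map(normalise, records):
        key = record["email"]
        # Records without an e-mail address cannot be matched and are kept.
        if key and key in seen:
            continue
        seen.add(key)
        unique.append(record)
    return unique


# Hypothetical sample data, for illustration only.
raw = [
    {"name": "  anna   schmidt", "email": "Anna@Example.com "},
    {"name": "Anna Schmidt", "email": "anna@example.com"},
    {"name": "ben MEYER", "email": "ben@example.com"},
]

cleaned = deduplicate(raw)
for record in cleaned:
    print(record)
```

Matching on an exact key is the simplest possible rule. Real data sets often need fuzzy matching, for example for typos in names, which is where specialised tools show their strengths.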

Integration and harmonisation of data

In today’s networked business world, the integration and harmonisation of data from different sources is essential for a holistic view of your company. A unified analytics platform can ensure a consistent view of all company data in a kind of digital control centre where all your data streams converge and interact harmoniously with each other.

Introducing a central hub that is linked to the relevant systems can create a single point of truth and automate data checks at the point of entry. This ensures that your integrated data is not only merged but also continuously checked for quality.

Monitoring tools for data quality

Continuous monitoring of data quality is like an early warning system for your data management. Automated tools for the continuous monitoring of data quality can detect inconsistencies, redundancies and missing data and report them via automated alerts if necessary. This enables you to react proactively to problems before they develop into major challenges.

Modern data quality tools make this possible by supporting:

- the connection and reading of source systems via APIs

- the efficient checking and cleansing of data

- the live tracking of the data quality status

- the creation of reports

This can take the form of a real-time dashboard for your data quality, which gives you an overview of the health of your data at all times. Data monitoring tracks the status of the data and documents it in a comprehensible manner.

Regular reviews and audits help to recognise and rectify data problems at an early stage. By using such tools, you create a culture of continuous improvement and vigilance with regard to your data quality.
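The principle behind automated alerts can be sketched as a set of rules, each with a score and a threshold. The Python example below is a simplified illustration. The rule names, thresholds and sample records are assumptions, and a real monitoring tool would send the alerts to a dashboard or messaging channel instead of printing them.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    name: str
    check: Callable[[list[dict]], float]  # returns a score between 0 and 1
    threshold: float                      # minimum acceptable score


def share_filled(field: str) -> Callable[[list[dict]], float]:
    """Build a check that measures how often `field` is filled."""
    def check(records: list[dict]) -> float:
        if not records:
            return 1.0
        return sum(bool(str(r.get(field) or "").strip()) for r in records) / len(records)
    return check


def share_unique(field: str) -> Callable[[list[dict]], float]:
    """Build a check that measures how many values of `field` are distinct."""
    def check(records: list[dict]) -> float:
        if not records:
            return 1.0
        return len({r.get(field) for r in records}) / len(records)
    return check


def run_checks(records: list[dict], rules: list[Rule]) -> list[str]:
    """Evaluate all rules and return one alert message per violated rule."""
    alerts = []
    for rule in rules:
        score = rule.check(records)
        if score < rule.threshold:
            alerts.append(f"{rule.name}: {score:.0%} is below the target of {rule.threshold:.0%}")
    return alerts


# Hypothetical rules and sample data, for illustration only.
RULES = [
    Rule("email filled", share_filled("email"), 0.9),
    Rule("order_id filled", share_filled("order_id"), 1.0),
    Rule("order_id unique", share_unique("order_id"), 1.0),
]
orders = [
    {"order_id": "A1", "email": "anna@example.com"},
    {"order_id": "A2", "email": ""},
    {"order_id": "A2", "email": "ben@example.com"},
    {"order_id": "A3", "email": "cara@example.com"},
]

alerts = run_checks(orders, RULES)
for alert in alerts:
    print("ALERT", alert)
```

Keeping the rules as data rather than hard-coding them makes it easier to adjust thresholds when requirements change.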

Practical measures to avoid data quality problems

Now that we have looked at strategies and technological solutions, it is time to look at concrete practical measures that can prevent data quality problems from occurring in the first place.

As with data integration, a centralised hub connected to the relevant systems can act as a single point of truth and check data automatically as it is entered. This ensures that your integrated data is not only merged, but also continuously checked for quality.

The following measures are important for improving manual data entry:

- Check for plausibility and form

- Verification of address details

- Input validation using reference values

- Duplicate search

These measures form the first line of defence against data quality problems.
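The four measures listed above can be combined in a single validation step that runs before a record is saved. The following Python sketch shows one possible shape for such a check. The reference list, the simplified postcode pattern, the plausibility limits and the field names are all assumptions for this example.

```python
import re
from datetime import date

ALLOWED_COUNTRIES = {"DE", "AT", "CH"}       # hypothetical reference values
POSTCODE_FORMAT = re.compile(r"[0-9]{4,5}")  # simplified form check


def validate_entry(entry: dict, existing_emails: set[str], today: date) -> list[str]:
    """Return a list of problems found in a manually entered record."""
    errors = []

    # Form check: the postcode must consist of 4 or 5 digits.
    if not POSTCODE_FORMAT.fullmatch(str(entry.get("postcode") or "")):
        errors.append("postcode: expected 4 or 5 digits")

    # Input validation using reference values.
    if entry.get("country") not in ALLOWED_COUNTRIES:
        errors.append("country: not in the reference list")

    # Plausibility check: the birth date must not be in the future or before 1900.
    birth = entry.get("birth_date")
    if birth is None or birth > today or birth.year < 1900:
        errors.append("birth_date: implausible value")

    # Duplicate search on the normalised e-mail address.
    if str(entry.get("email") or "").strip().lower() in existing_emails:
        errors.append("email: possible duplicate of an existing record")

    return errors


# Hypothetical sample data, for illustration only.
existing = {"anna@example.com"}
today = date(2024, 1, 1)

good = {"postcode": "10115", "country": "DE",
        "birth_date": date(1990, 5, 17), "email": "ben@example.com"}
bad = {"postcode": "1011A", "country": "Germany",
       "birth_date": date(2090, 1, 1), "email": "Anna@Example.com"}

print(validate_entry(good, existing, today))
for problem in validate_entry(bad, existing, today):
    print(problem)
```

Returning all problems at once, rather than stopping at the first one, lets the person entering the data correct everything in a single pass.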

One frequently overlooked problem is data silos, which tend to arise from organisational structures that promote the separation of data in different departments. Such silos often lead to inconsistent and inaccurate analysis results, so organisations need to integrate their data to achieve accurate and consistent results. To combat this problem, develop an organisation-wide data strategy that transcends departmental boundaries and provides a unified view of company data.

In the following sections, we will look at some specific approaches to implementing these practical measures.

First-Time-Right approach

The first-time-right approach is like the “measure twice, cut once” principle in the world of data. In data management, it aims to prevent incorrect or incomplete data at the point of capture. This means that you focus on quality right from the start and thus minimise the effort required for subsequent corrections.

Shortcomings in manual data entry can be reduced by measures such as intelligent input masks and input validations. These can perform plausibility and form checks during data entry. A practical way to implement the ‘first time right’ approach is to use user-friendly front-ends such as Microsoft Excel for data entry. By providing intuitive and error-resistant input interfaces, you make it easier for your employees to capture high-quality data right from the start.

Remember: Every error prevented during data entry is a step towards better data quality and more efficient processes, which can also reduce costs. You can optimise this process even further with our tips.

Avoidance of data silos

Data silos are like isolated islands in your sea of data – they hinder the free flow of information and lead to inconsistent and incomplete views of your organisation. A strong corporate culture that encourages data sharing is crucial to prevent data silos from forming. It’s about creating a mindset where data is seen as a shared resource that benefits all departments.

Data silos should be broken down and integrated on a standardised analysis platform to ensure a consistent view of all company data. A centralised data warehouse can help avoid data silos by integrating data from different departments and making it accessible. Think of a data warehouse as a kind of digital library where all your company data is catalogued, organised and accessible to any authorised user.

The regular synchronisation of data between departments also supports data consistency and helps to identify and rectify discrepancies at an early stage.
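A simple way to picture such a synchronisation check is a comparison of the same records held by two departments. The Python sketch below is an illustration under assumptions: the department names, the shared key `customer_id` and the compared fields are made up for this example.

```python
def find_discrepancies(source_a: list[dict], source_b: list[dict],
                       key: str, fields: list[str]) -> list[tuple]:
    """Compare two record sets and return (key, field, value_a, value_b) tuples."""
    index_a = {row[key]: row for row in source_a}
    index_b = {row[key]: row for row in source_b}
    issues = []

    for record_key, row_a in index_a.items():
        row_b = index_b.get(record_key)
        if row_b is None:
            # The record exists only in the first source.
            issues.append((record_key, "<record>", "present", "missing"))
            continue
        for field in fields:
            if row_a.get(field) != row_b.get(field):
                issues.append((record_key, field, row_a.get(field), row_b.get(field)))

    for record_key in index_b:
        if record_key not in index_a:
            # The record exists only in the second source.
            issues.append((record_key, "<record>", "missing", "present"))

    return issues


# Hypothetical sample data from two departments, for illustration only.
sales = [
    {"customer_id": 1, "city": "Berlin", "phone": "030-111"},
    {"customer_id": 2, "city": "Hamburg", "phone": "040-222"},
    {"customer_id": 3, "city": "Munich", "phone": "089-333"},
]
support = [
    {"customer_id": 1, "city": "Berlin", "phone": "030-111"},
    {"customer_id": 2, "city": "Bremen", "phone": "040-222"},
    {"customer_id": 4, "city": "Cologne", "phone": "0221-444"},
]

issues = find_discrepancies(sales, support, "customer_id", ["city", "phone"])
for issue in issues:
    print(issue)
```

The sketch only reports differences. Deciding which department holds the correct value is a governance question that the code cannot answer.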

Regular data quality checks

Regular data quality checks are like regular health checks for your data. Integrating data quality checks into daily business processes helps to identify problems at an early stage. Instead of viewing data quality as a separate task, it should be an integral part of your daily business processes. This enables continuous monitoring and rapid response to potential problems.

Regular data quality checks can help to identify and rectify data problems before they have a major, long-term impact. To eliminate persistent data quality deficiencies, the checks must be integrated into business processes. Remember that data quality is not a one-off project, but an ongoing process. By carrying out regular checks and incorporating the results into your business processes, you create a cycle of continuous improvement that constantly increases the quality of your data.

Data governance as the key to long-term data quality

Data governance is the foundation on which long-term data quality is built. It is like a set of rules that ensures that data is managed consistently, reliably and securely. Organisations should establish clear responsibilities in a top-down approach to raise awareness of data quality at all levels. This means that the initiative for data quality must come from the management level and be carried through all levels of the organisation.

Data governance requires the distribution of responsibilities for data creation, maintenance and quality assurance. Data owners need decision-making authority and are supported by the technical expertise of the data stewards. This distribution of roles ensures that there are clear contact persons and persons responsible for every aspect of data management.

One goal of data governance is to find an optimal combination of preventive and reactive measures for the early detection of data problems. This means that you not only react to problems, but also take proactive measures to prevent data quality issues before they arise.

Summary

In this comprehensive guide, we have highlighted the critical importance of data quality to business success. From the definition and importance of data quality to practical strategies for improvement, technology solutions and data governance, we have covered all the key aspects of effective data quality management. We learnt that data quality is not a one-off project, but a continuous process that needs to be integrated into daily business operations.

Implementing robust data quality management may seem like a challenge at first, but the potential benefits are immense. Improved decision making, increased efficiency, cost savings and a competitive advantage are just some of the rewards you can reap. Remember, in today’s data-driven world, quality data is not just an advantage, it’s a necessity. By implementing the strategies and best practices outlined in this guide, you’ll lay the foundation for a future where your data is not just a resource, but a true asset to your organisation. Make data quality your top priority and you will reap the rewards in the form of better business results and a sustainable competitive advantage.

Frequently asked questions

What are the most common causes of poor data quality?

The most common causes of poor data quality are manual input errors, outdated data, data silos, a lack of standardisation and insufficient data validation. A lack of employee training and the absence of clear data governance guidelines can also lead to quality problems. It is important to identify these causes and take appropriate measures to improve data quality.

How can I measure the ROI of investments in data quality?

You can measure the ROI of investments in data quality by looking at factors such as reduced error rates, improved decision making, increased productivity and cost savings. Improvements in customer satisfaction and sales can also be indirect indicators of ROI.

What role does artificial intelligence play in improving data quality?

Artificial intelligence plays an important role in improving data quality: it can be used for automated data cleansing, anomaly detection and predictive analyses. AI algorithms can uncover hard-to-recognise patterns and inconsistencies, improving the efficiency and accuracy of data quality processes.

How can I sensitise my employees to the topic of data quality?

To sensitise your employees to the topic of data quality, you can conduct regular training sessions, workshops and internal communication campaigns. Show concrete examples of the effects of poor data quality and establish a culture of data responsibility. Reward employees who are committed to improving data quality.

How often should data quality checks be carried out?

Data quality checks should be performed at different frequencies depending on the type and use of the data. Critical business data should ideally be monitored continuously, while less critical data may be reviewed weekly or monthly. It is advisable to implement automated checks and perform regular manual audits to avoid problems.